why google is plotting galactic conquest with a digital con man

In a field already known for wild hyperbole, a Google executive aims for the stars.

A well-dressed, middle-aged man announcing that galactic conquest is just around the corner sounds like the opening of a sci-fi novel or TV show set at least a thousand years in the future. Yet this is Demis Hassabis’ claim. According to him, the chatbots of today will achieve that long-fabled AGI escape velocity and start working to colonize the galaxy around 2030 or thereabouts.

Well, that’s bold, especially coming from a man who won a Nobel Prize for creating an AI that predicts protein structures, enabling all sorts of very useful medical research. At the same time, considering that the closest things we have to interstellar spacecraft are two probes we launched almost half a century ago, the idea that we’ll be setting solar sail for Proxima Centauri in five to ten years definitely makes me raise an eyebrow in skepticism. This schedule seems less aggressive and more delusional.

One of the biggest reasons why is that despite the increasingly grandiose claims that chatbots are about to become our sentient, hyper-intelligent overlords, the data does not suggest this at all. In reality, they struggle mightily with anything beyond mapping words, concepts, and phrases. That is perfectly fine, since this is what they were built to do in the first place: translate between natural human speech and machine-indexed data and concepts. It’s when you try to make them an everything model that things go awry pretty quickly.

For example, take an article from The Times in which journalist Hugo Rifkind shares a bizarre story about a political news editor who wanted to use ChatGPT to help punch up his podcast and found that the chatbot simply created a whole episode before he even had a chance to upload what he recorded. Rather than help, the bot shoved the human out of the way, took over, and vomited a stream of words, stats, and jokes that had nothing in common with reality. That’s a bit of a problem when you’re trying to do a podcast about, you know, actual news that is real and happening.

how to pretend to understand the universe

While you’re often told that no one really knows why LLMs hallucinate like this, we do know what’s happening under the hood. Chatbots do not have concepts in the same way we do. We understand that, for example, LeBron James is a person and he plays basketball because we have very concrete definitions of what those things are. There is even some research indicating that we have specialized neurons whose job it is to track every permutation of those concepts and how to process them.

We can visualize the game, hear the noise of it, imagine the players playing it. LLMs? Not so much. They understand the link between LeBron James, person, athlete, and basketball because they’re frequently mentioned together in their training sets. Their model of the world is entirely probabilistic and depends on how close together all of the relevant tokens sit in the vector space the model learned during training. If those distances come out wrong, they’ll spit out completely incorrect answers just because the numerical representation of the wrong answer was similar enough to fall within their margin of error.
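To make that a little more concrete, here is a toy sketch of "closeness" between concepts, using cosine similarity, a common way to compare vectors. Everything in it is an assumption for illustration: the three-number vectors are invented, `EMBEDDINGS` and `neighbors` are hypothetical names, and real models work with learned vectors of hundreds or thousands of dimensions rather than a lookup table like this.

```python
import math

# Hypothetical, hand-written "embeddings". The numbers are invented for
# illustration and do not come from any real model.
EMBEDDINGS = {
    "LeBron James": [0.9, 0.8, 0.1],
    "basketball": [0.8, 0.9, 0.2],
    "Michael Jordan": [0.85, 0.85, 0.15],
    "protein folding": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means 'very similar'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def neighbors(query, threshold):
    """Return every other entry whose similarity to `query` meets the threshold."""
    q = EMBEDDINGS[query]
    return sorted(
        name
        for name, vec in EMBEDDINGS.items()
        if name != query and cosine_similarity(q, vec) >= threshold
    )


# A strict threshold keeps only the basketball-related entries.
print(neighbors("LeBron James", 0.95))
# A loose threshold lets an unrelated concept count as "similar enough".
print(neighbors("LeBron James", 0.2))
```

With these made-up numbers, the strict threshold returns only "Michael Jordan" and "basketball", while the loose one also lets "protein folding" through. Note that even the strict case treats two different players as nearly interchangeable, which is roughly how a fact about one can end up attached to the other.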

Likewise, because they have no conception of fact, fiction, or causality, when you ask them a question, they’re going to spit out something that makes sense as text, not as an accurate, factual response. The hope is that having ingested enough facts, output and factual data will intersect more often than not, and they will end up regurgitating reality rather than fiction. Except in the immense amount of data they consume, there is plenty of room for fact and fiction to cross as well, or for facts to never be mapped the way they’re expected, so the response is just pseudo-randomly assembled.
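A rough sketch of why "makes sense as text" and "is true" can come apart: the model picks the next word by probability, and nothing in that step checks facts. The prompt and the probabilities below are invented for illustration, and `NEXT_TOKEN_PROBS`, `greedy_pick`, and `sample_pick` are hypothetical names, not any real chatbot's internals.

```python
import random

# Hypothetical next-token probabilities after the prompt
# "The capital of Australia is". The numbers are invented; they imagine a
# training set that mentions Sydney far more often than Canberra.
NEXT_TOKEN_PROBS = {"Sydney": 0.55, "Canberra": 0.40, "Melbourne": 0.05}


def greedy_pick(probs):
    """Return the single most probable token, true or not."""
    return max(probs, key=probs.get)


def sample_pick(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# The most likely continuation reads fine as text but is factually wrong:
# the capital of Australia is Canberra.
print(greedy_pick(NEXT_TOKEN_PROBS))

# Sampling can land on the right answer, but only by weighted chance.
rng = random.Random(0)
print(sample_pick(NEXT_TOKEN_PROBS, rng))
```

In this toy setup the greedy choice is "Sydney". The correct answer is in the distribution the whole time, it just isn't the most statistically popular one.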

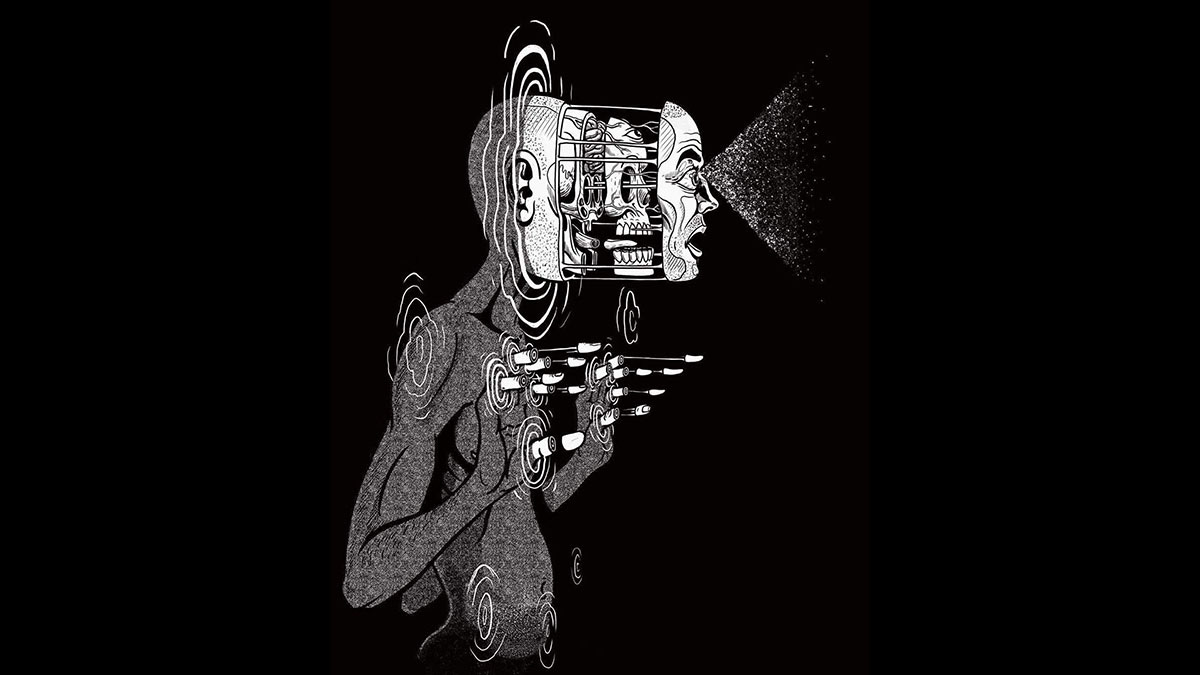

This happens all the time with LLMs, and the larger they get, the more it happens as the model becomes too large and unwieldy to map relationships accurately. Things get even worse when you start training AI on AI-generated content, as it recursively amplifies whatever hallucinations it ingests. So, the bigger and more elaborate LLMs get, the more they become very fast, very confident, very inaccurate con men we’re constantly being told to rely on for answers to life’s questions by tech execs.
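The recursive part can be sketched with some back-of-the-envelope arithmetic. This is a deliberately crude toy, not a measurement of any real system: it assumes each new generation of a model inherits every false statement in its training data and also garbles a fixed fraction of the statements that were still true. The function name and both rates are hypothetical.

```python
def false_share_by_generation(initial_false, new_error_rate, generations):
    """Track the share of false statements across training generations.

    Assumptions (all invented for illustration):
    - each generation is trained only on the previous generation's output
    - existing falsehoods are carried over unchanged
    - a fixed fraction of the remaining true statements turns false
    """
    shares = [initial_false]
    for _ in range(generations):
        current = shares[-1]
        shares.append(current + new_error_rate * (1.0 - current))
    return shares


# Start with 2% falsehoods and assume 5% of true statements get garbled
# each time a model is trained on the last model's output.
for generation, share in enumerate(false_share_by_generation(0.02, 0.05, 5)):
    print(f"generation {generation}: {share:.1%} false")
```

Under those assumptions the share of falsehoods only ever goes up, because nothing in the loop corrects an error once it is in the data.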

admiring the emperor’s new a.i.

Just imagine Hassabis’ super-LLM very confidently telling scientists that it solved the problem of warp drives and all we need to make one is two meters of kryptonite and a liter of dilithium kyber crystals. It will be very wrong. But it will be very confident, and at this point, I can’t put it past politicians and tech bros to demand that scientists listen to the chatbot, and to treat any reply of “those are fictional substances, and not even in the right units” as nothing more than lazy excuses by AI-phobic losers. Who should be replaced by said AI.

Ultimately, this is the problem with breathless hype and technobabble. To stay in the news and advertise their wares, tech executives have to be more and more hyperbolic, and they can’t back down because their investors take them too seriously. This is why they’re promising galactic conquest with an AI oracle while, in reality, they’re struggling to keep chatbots from telling people to eat glue, all while insisting that any problem you see today will be solved in a year, tops.

Except no, those problems aren’t actually getting solved, because tech companies are fighting math with little more than belligerent hype while feeding more flawed data into models pushed far past their optimal size and applied to the wrong tasks. My guess is that by 2030, we’re not going to be colonizing the galaxy and chatbots will still be confidently lying to us while the apps on our devices pretend no other answers exist or are valid. That seems to be the course we’ve chosen to take as a society for some inexplicable reason.