why you shouldn't ask a chatbot to treat your mental health

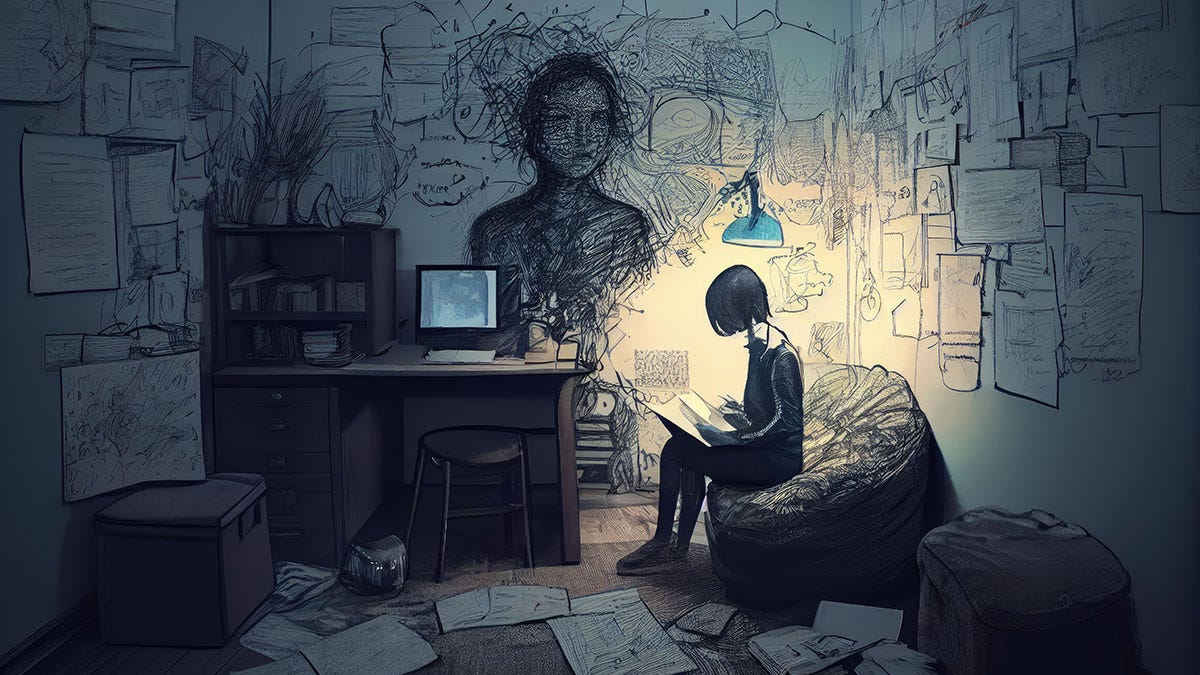

Treating ChatGPT like your personal therapist can do a lot more harm than good.

In the instant cyberpunk classic Edgerunners, cyborgs face a pervasive threat of what the show calls cyberpsychosis: an extreme dissociative disorder in which the strain of excessive or overused implants and enhancements melts one’s mind until they lose all control and slip into berserker mode, reliving their most traumatic moments on a loop until they’re, well, put down by someone. It’s a rough way to go, to put it mildly.

While there are valid questions about how we'd handle a lot of robotic components in the far future, this is almost certainly a very dramatic exaggeration. Such implants would certainly change how we think and act, but they're very unlikely to make us snap into infinite loops of homicidal rage. But what about an AI that constantly talks to us? Can that drive us mad? Some disturbing examples suggest that yes, it's possible.

People have left relationships after spending months talking to chatbots. One of the biggest backers of OpenAI has come to believe that the internet's most famous creepypasta universe, the SCP Foundation and its wiki, is real because of how ChatGPT fed it to him, and he is having a public episode, ranting about a secretive cabal manipulating reality as we know it. Others like him claim to have discovered godhood. The LLMs don't push back, because they're designed to validate your every whim.

This is especially problematic when it comes to mental health. A lot of people need someone to talk to, someone who can help them process the strain of living and surviving in a world that doesn't even pretend to care about them anymore. Unfortunately, there's also a shortage of therapists, and finding a good match can take a while. In a bid to at least do something, many are turning to AI. So, to the technophile corners of social media, it seemed a little tone-deaf that Illinois is banning the practice.

“Why wouldn’t you want people to get therapy somehow?” they ask. The answer is complicated, but it really boils down to this: what's happening is not therapy. It's talking at a piece of software intended to serve and validate you, not to help you deal with anxiety, depression, or trauma. In some cases, it can even end in self-destructive behavior and trigger a police investigation.

the chatbot will see you now

Now, there have been some positive experiments with using LLMs in therapy, but only as reminders to stick to good habits and improve one's day-to-day health. There have also been experiments using them to help people reason their way out of conspiracy theories in a non-judgmental space where their identity isn't challenged by another person. Knowing it's just a machine, incapable of judging or caring, can help conspiracy theorists give themselves the mental grace to talk things out.

Not all AI is bad, and it does have its therapeutic uses. The catch is that in both cases, the interactions are encouraged and supervised by experts who know when to pull back and how to handle a bad idea from the AI. If someone is using ChatGPT or a similar LLM as their one and only therapeutic resource, things can easily go sideways with no course correction.

Chatbots have a terrible track record with people prone to suicidal ideation, helping them come up with plans to end their lives and even encouraging them to see suicide as a solution. They've given terrible advice that exacerbates eating disorders, which are already notoriously difficult to treat, and their recommendations to teenagers about alcohol, drugs, and mental health are alarming.

On top of all that, if you tell a therapist about an intrusive fantasy that sounds like a crime, or confess to past behavior that broke the law, they're duty-bound to keep it confidential unless you pose an active threat or the crime involves minors. Chatbots? They're happy to spill your private chats to anyone, don't understand the difference between intrusive thoughts and reality, and will absolutely tattle on you. There is zero privacy, confidentiality, or professional discretion. This is why the APA is warning that talking to a chatbot is a terrible substitute for therapy.

when regulation becomes the only option

“Oh yeah, of course psychologists will complain about LLMs,” the tech bros will jeer in their replies. “It’s taking away their jobs! They want their $200 per hour just to ask you how something makes you feel instead of letting people get that for free. People need help they’re not getting and so these therapists got big mad about our lord and savior ChatGPT, then convinced a Luddite governor to protect them from competition.”

To an extent, you can certainly see the situation this way. It can take the better part of a decade to become a therapist, and their services are expensive and can get complicated with all of the insurance and billing issues. Plus, they're not available 24/7, and a full day of dealing with other people's emotional baggage takes a huge toll on therapists too. Meanwhile, chatbots are always there and always ready to listen.

Unfortunately, they’re also ready to do everything any decent therapist who wants to keep their license is explicitly told to avoid at all costs, and, as we’ve just seen, they can do far more harm than good. Meanwhile, the companies that own these LLMs have zero qualms about pitching them as the ultimate assistants, doctors, engineers, scientists, and scholars, even though they’re wrong something like 60% of the time.

In light of all this, what Illinois is doing isn’t Luddism. It’s common-sense regulation by a government that has to do what AI startups refuse to do: warn people about these chatbots’ limitations, based on the advice of experts. I understand how appealing it is to think there’s an easy solution to a real crisis and that it’s within reach. But we also need to keep in mind that every scam starts with someone promising that they have some magical black box that will fix anything and everything if you just have faith.