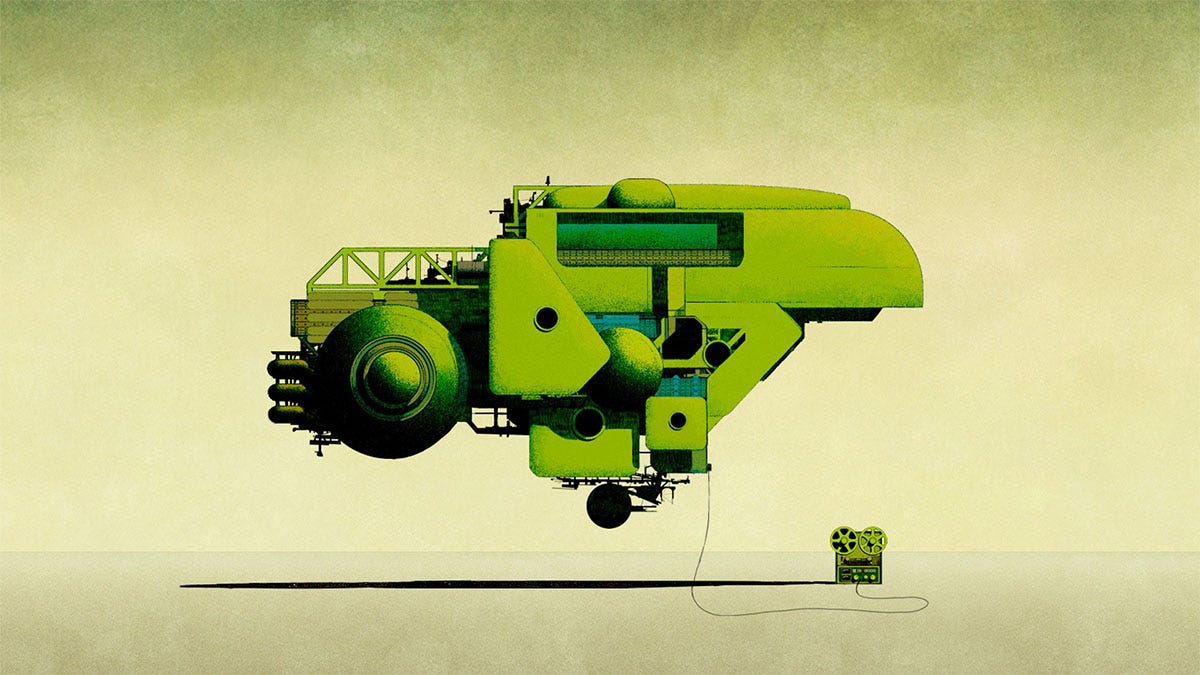

making music no one needs to hear

Video platforms are awash in AI slop. Now, it's coming for music and podcasting too, even though no one is excited about it...

A new, insidious business model is taking over media platforms. The recipe is simple. Use a generative AI tool to churn out low-effort videos at industrial scale. Or scrape Reddit posts, have an AI voice read them over footage of Minecraft parkour, Subway Surfers, or a similar game, and post up to six times a day. When people watch these videos or simply stumble across them, the account owners collect ad revenue with minimal effort.

Now, a similar plague is coming for music platforms too. One of the biggest and most viral new bands on Spotify earlier this year was Velvet Sundown. If that sounds less like a real band and more like a weird mutation of the supergroup Velvet Revolver, you’re spot on. (You can also read obvious context cues.) The band, its music, its backstory, and its promotional images were all generated by AI.

Users have also been complaining about an explosion of fake artists with clearly AI-generated music. This music seems to come more or less directly from Spotify itself, in a transparent bid to redirect the revenue from tens, if not hundreds, of millions of streams away from artists and into the platform’s pockets. If the artists don’t exist and their background muzak wasn’t composed or produced by humans, there are no royalties to pay. There is also nothing to worry about until enough listeners complain that they don’t like the content.

as my music bot gently bleeps…

And it’s not just music. At least one brash AI startup plans to flood us with 5,000 new AI-generated podcasts. It calls anyone who objects to losing the ability to reliably discover human-made work a “lazy Luddite.” Yes, insulting your critics is indeed a charming and convincing argument for churning out millions of episodes of robots talking at you that no one asked for. Still, I do wonder whether five minutes with a PR professional might have improved the pitch just a little.

How pervasive is AI slop? Well, Spotify, YouTube, and Instagram aren’t very open with their stats. Their users, however, are complaining that slop has become a significant portion of their feeds over the last year or so. One of the most solid stats out there comes from Deezer, a French music streaming service. Deezer reports that it receives 30,000 AI-generated tracks daily, almost a third of all the tracks uploaded to its servers.

Even worse, Deezer says that between two-thirds and three-quarters of the plays for these tracks come from bots, a problem common to AI content on every other platform as well. Platforms are flooded with fake videos, books, and music from fake creators, all of which drive up storage and bandwidth costs. Meanwhile, few people actually watch, read, or listen to this content on a regular basis. That leaves the platforms’ real customers, the advertisers, getting ripped off in this supercharged extension of the doom loop that’s killing the web as we know it.

how fraud becomes a business model

Consider content that is uploaded to be occasionally stumbled upon by humans, but is played mostly by bots for the sole purpose of vacuuming up ad revenue. If that sounds a lot like a front for a money-laundering operation, you’re right. That’s pretty much what it is. The catch is that there’s not much the platforms can do other than ban the content and the bots. Both simply reappear under different accounts, with the content regenerated to avoid detection.

The open nature of social media platforms makes it far too easy for anyone to sign up and start contributing, because adding friction to uploads goes against the platforms’ business model. On top of that, many of these slop channels and bot farms operate in countries rife with criminal organizations that pay off law enforcement to look the other way. For them, an account takedown is just a minor inconvenience.

We see the same dynamic across everything digital today. It shows up in the review bots trying to game Amazon and in political influence campaigns. It also shows up in this basic ad fraud scheme, which generative AI has automated for exponentially greater impact than it could have had otherwise. Unfortunately, the tech giants have little incentive to really tackle bots. It’s an extremely difficult problem to begin with. As long as they can shrug, say the bots are just too hard to root out, and still book billions in ad pre-sales every fall without the industry holding their feet to the fire, why bother?